Dependable AI

Tabbed contents

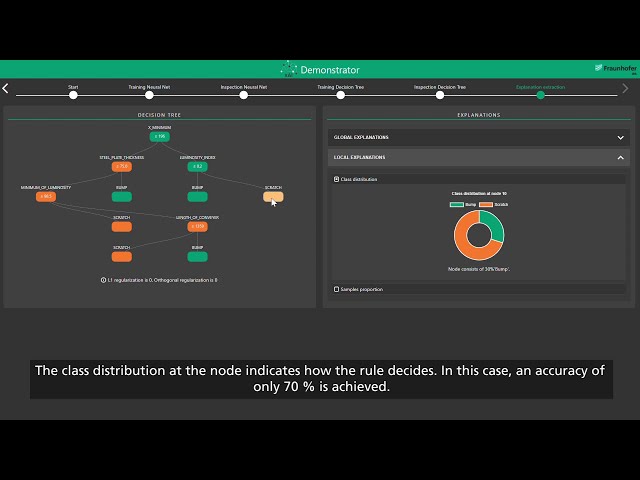

Explainable AI

An explainable artificial intelligence (AI) is an AI whose inner workings and decision-making are comprehensible to humans. This makes it possible, for example, to identify which features of the input data are important for a decision, to understand the model's internal logic or, for a specific decision, to find out why it was made the way it was.
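
To make the idea of identifying important input features concrete, here is a minimal sketch of permutation feature importance. This is one common model-agnostic explanation technique and not necessarily the method used in the research described here. The model `toy_model` and the data are hypothetical: the classifier only looks at feature 0, so shuffling feature 1 should leave its accuracy unchanged.

```python
import random
from typing import Callable, List, Sequence

Row = List[float]


def accuracy(model: Callable[[Row], int], X: Sequence[Row], y: Sequence[int]) -> float:
    """Fraction of rows that the model classifies correctly."""
    return sum(model(x) == t for x, t in zip(X, y)) / len(y)


def permutation_importance(model: Callable[[Row], int], X: Sequence[Row],
                           y: Sequence[int], n_repeats: int = 10,
                           seed: int = 0) -> List[float]:
    """Mean drop in accuracy when each feature column is shuffled.

    A large drop means the model relies on that feature; a drop near
    zero means the feature barely influences its decisions.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the label
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


def toy_model(x: Row) -> int:
    """Hypothetical black box: decides on feature 0 only."""
    return int(x[0] > 0.5)


data_rng = random.Random(42)
X = [[data_rng.random(), data_rng.random()] for _ in range(200)]
y = [toy_model(x) for x in X]
print(permutation_importance(toy_model, X, y))
```

Because the technique only needs the model's predictions, it also works for black-box models. It gives a global view of feature relevance but does not by itself explain why an individual decision was made.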

Research questions

- How can global explainability be achieved for convolutional neural networks?

- How can understandable global explanations for deep neural networks be generated that guarantee comprehensive coverage of the input space?

- Is it possible to define a process which provides a standard procedure for extracting and interpreting explanations and deriving recommendations for action?

- How must explanations be structured, represented and, if necessary, combined in order to gain the greatest possible insight?

Practical relevance

Many modern AI methods are so-called black boxes: how they arrive at a result cannot be traced from the outside. The need for explainability grows as AI methods are increasingly used in key processes. Explainability helps to assess how AI models work and where their limitations lie. This makes it possible to draw conclusions about the underlying processes and increases acceptance of AI among the workforce.

In addition, explainability is sometimes needed to assess the risks of using AI methods in production. In some cases it is also required by law, particularly for regulated and safety-critical applications.

VERIFICATION

Verifiable AI refers to methods that assess the safety and robustness of an existing AI model. In most cases, mathematical methods are used, which make it possible to establish with certainty whether a tested property of the AI holds. For example, the robustness of the AI against disturbances, or the range of outputs it can possibly produce, can be examined.
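
One simple mathematical technique for bounding the range of possible outputs is interval bound propagation. The sketch below assumes a small fully connected ReLU network with hypothetical weights `layers` and a hypothetical input region `input_box`. It computes bounds that are guaranteed to contain every output the network can produce for inputs in that region. It illustrates the principle only and is not the verification tooling used at Fraunhofer IPA.

```python
from typing import List, Sequence, Tuple

Interval = Tuple[float, float]
Layer = Tuple[List[List[float]], List[float]]  # (weight matrix W, bias vector b)


def affine_bounds(W: List[List[float]], b: List[float],
                  boxes: Sequence[Interval]) -> List[Interval]:
    """Sound bounds for W @ x + b when each x_i lies in boxes[i]."""
    out = []
    for row, bias in zip(W, b):
        lo = hi = bias
        for w, (x_lo, x_hi) in zip(row, boxes):
            # A positive weight is smallest at the input's lower bound,
            # a negative weight at the upper bound (and vice versa for the max).
            lo += w * x_lo if w >= 0 else w * x_hi
            hi += w * x_hi if w >= 0 else w * x_lo
        out.append((lo, hi))
    return out


def relu_bounds(boxes: Sequence[Interval]) -> List[Interval]:
    """ReLU is monotone, so it can be applied to both interval ends."""
    return [(max(0.0, lo), max(0.0, hi)) for lo, hi in boxes]


def output_range(layers: Sequence[Layer], input_box: Sequence[Interval]) -> List[Interval]:
    """Propagate an input box through affine+ReLU layers (no ReLU after the last)."""
    boxes = list(input_box)
    for k, (W, b) in enumerate(layers):
        boxes = affine_bounds(W, b, boxes)
        if k < len(layers) - 1:
            boxes = relu_bounds(boxes)
    return boxes


# Hypothetical 2-2-1 network; input region: x1 and x2 both in [0, 1].
layers = [
    ([[1.0, -1.0], [0.5, 2.0]], [0.0, -1.0]),
    ([[1.0, -2.0]], [0.5]),
]
input_box = [(0.0, 1.0), (0.0, 1.0)]
SAFETY_LIMIT = 2.0  # hypothetical requirement: output must stay below this value

lo, hi = output_range(layers, input_box)[0]
print(f"output in [{lo}, {hi}]")  # → output in [-2.5, 1.5]
print("verified" if hi < SAFETY_LIMIT else "inconclusive")  # → verified
```

The bounds are sound but can be loose. If the computed upper bound stays below the safety limit, the property is proven for every input in the box. If it does not, the result is inconclusive, and a tighter (more expensive) method is needed.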

Research questions

- How can AI models be verified efficiently and reliably?

- How can abstract safety rules be translated into concrete verification requests?

- How can AI models be designed and trained to meet specified safety requirements?

Practical relevance

Verifiable AI plays a major role in applications that have to meet strict safety standards, such as collaborative robots, automated guided vehicles or intelligent machine tools. Such applications are often governed by clear norms or industry standards with which an AI application does not automatically comply. In these cases, verifiable AI makes it possible to test and verify the relevant boundary conditions. As a result, AI methods can be used in processes that previously had to be performed manually or with inflexible rule-based systems because of strict regulatory requirements.

Uncertainty Quantification

In many areas where artificial intelligence (AI) is used, accurate predictions and hard decisions alone are often not sufficient. Uncertainty quantification plays a crucial role in making AI applications robust and reliable. The aim is to enable algorithms to take measurement uncertainties in processes or data into account directly, thus improving decision-making. In addition, planning and forecasting can become more effective and reliable in situations governed by inherent uncertainty.
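
A basic way to let an algorithm account for measurement uncertainty directly is Monte Carlo propagation. Sample plausible true input values from the measurement's error distribution, run each through the model, and summarize the spread of the outputs. The sketch below assumes Gaussian measurement noise. It uses a hypothetical stand-in model, `lambda x: x ** 2`, in place of a trained AI model.

```python
import random
import statistics
from typing import Callable, Tuple


def propagate_uncertainty(model: Callable[[float], float], value: float,
                          sigma: float, n_samples: int = 10_000,
                          seed: int = 0) -> Tuple[float, float]:
    """Monte Carlo propagation of a Gaussian measurement uncertainty.

    Draws n_samples plausible true values around the measured `value`
    (standard deviation `sigma`), pushes each through `model`, and
    returns the mean and standard deviation of the outputs.
    """
    rng = random.Random(seed)
    outputs = [model(rng.gauss(value, sigma)) for _ in range(n_samples)]
    return statistics.fmean(outputs), statistics.stdev(outputs)


# Hypothetical nonlinear model applied to a sensor reading of 3.0 +/- 0.1.
mean, std = propagate_uncertainty(lambda x: x ** 2, value=3.0, sigma=0.1)
print(f"prediction {mean:.2f} +/- {std:.2f}")
```

For this stand-in model the exact answer is known. With x ~ N(3, 0.1²), E[x²] = 9.01 and the standard deviation of x² is about 0.60, so the Monte Carlo estimate can be checked against it. The same sampling loop works unchanged for models with no closed-form answer.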

Research questions

- Which methods can be used to propagate and process uncertainties in the context of AI?

- How can uncertainty quantification be carried out efficiently and yet as accurately as possible?

- How can the quantification of uncertainties be used effectively for planning and decision-making purposes?

Practical relevance

Uncertainty quantification is used primarily in safety-critical applications such as human-robot collaboration, but also in areas such as manufacturing, logistics or machine control, where errors often result in high costs. For instance, a robot in an assembly cell can give feedback in advance if it is unable to fully interpret the current situation. Similarly, anomaly detection software in quality control can flag parts whose anomaly detection result is ambiguous. Uncertainty quantification can thus considerably improve the practical suitability of AI applications.
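
The quality-control example can be sketched as a three-way decision. Instead of forcing every part into "ok" or "defect", the system routes parts with an unclear result to a human. The sketch below assumes the uncertainty estimate comes from the disagreement (standard deviation) of several independently trained models, a so-called deep ensemble. The function name `classify_part` and both thresholds are hypothetical.

```python
import statistics
from typing import Sequence


def classify_part(scores: Sequence[float], threshold: float = 0.5,
                  max_spread: float = 0.1) -> str:
    """Turn an ensemble of anomaly scores for one part into ok / defect / review.

    scores: anomaly probabilities from at least two independently trained
    models. Their standard deviation serves as the uncertainty estimate.
    """
    mean = statistics.fmean(scores)
    spread = statistics.stdev(scores)  # needs at least two scores
    if spread > max_spread:
        return "review"  # models disagree: the result is unclear
    return "defect" if mean >= threshold else "ok"


print(classify_part([0.05, 0.08, 0.03]))  # → ok
print(classify_part([0.92, 0.88, 0.95]))  # → defect
print(classify_part([0.20, 0.75, 0.55]))  # → review
```

The same pattern fits the assembly-cell robot. When its uncertainty exceeds a limit, it asks for help instead of acting. In practice, the thresholds would have to be calibrated on validation data.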

STANDARDIZATION

Today, norms and standards fulfil a multitude of essential tasks. They support the development of safe products by reflecting the current state of research, thereby giving customers confidence in modern technologies. At the same time, norms and standards give manufacturers legal certainty when launching a new product on the market. Ultimately, the same must apply to products that use artificial intelligence (AI). So far, however, no standard for intelligent machines and products exists.

Research questions and practical relevance

Within the scope of the standardization roadmap of the German Institute for Standardization (DIN) and the expert council of the Association for Electrical, Electronic & Information Technologies (VDE), Fraunhofer IPA is laying the foundation for such a standard. In the process, it has become clear that artificial intelligence differs significantly from classical software and must therefore be given special consideration.

In particular, aspects such as determining the necessary level of safety, the specific requirements of industrial automation and medicine, and ethical issues must be considered with regard to the need for safety, the capacity for innovation and economic efficiency.

A further focus of the standardization roadmap is on how to determine and ultimately certify the quality of an intelligent system. Methods of Explainable AI (xAI), such as those being researched at the Fraunhofer Center for Cyber-Cognitive Intelligence, play a decisive role in this regard.